When it comes to big data processing, selecting the right file format is crucial. The format you choose affects query performance, storage footprint, and how efficiently the data can be processed. Four popular file formats for big data storage and processing are ORC, RC, Parquet, and Avro. In this blog, we will compare these formats, look at their advantages and disadvantages, and discuss which one is best suited to which use cases.

ORC (Optimized Row Columnar)

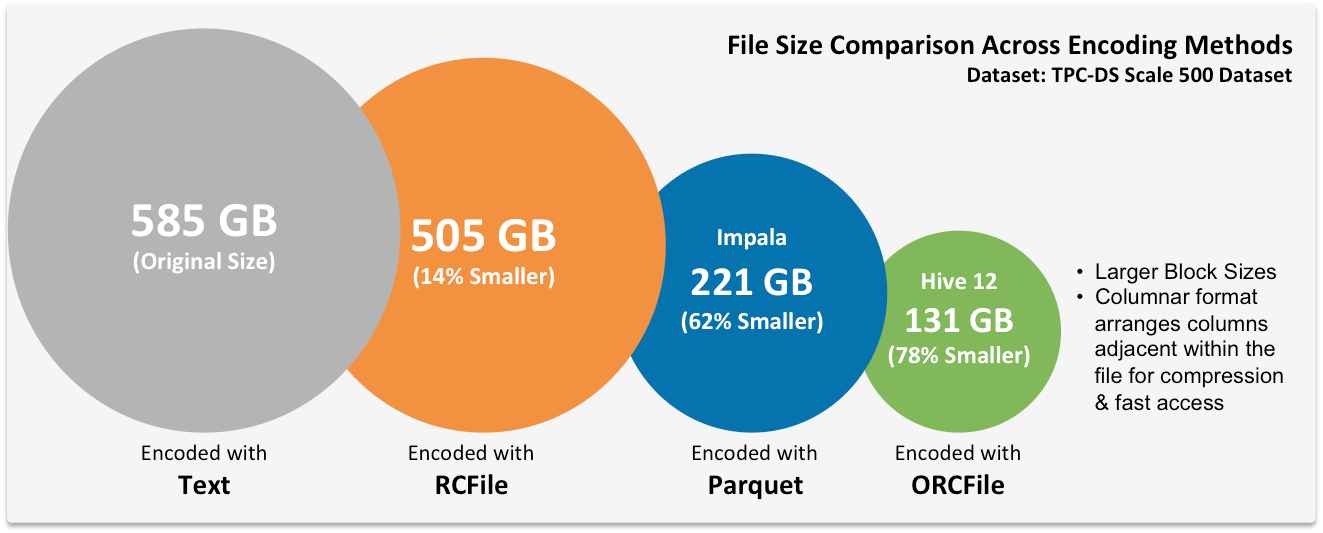

ORC is a columnar file format originally developed for Hive, a popular SQL-like interface for Hadoop. ORC has excellent compression capabilities and is well suited to storing large data sets; it typically compresses data to a much smaller size than formats like RC and Avro. ORC also supports predicate pushdown and lightweight indexing: it stores statistics such as per-column minimum and maximum values, so readers can skip blocks of data that cannot match a query's filter, which improves query performance.
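To make predicate pushdown more concrete, here is a toy Python sketch, not ORC's actual reader. It assumes the data is split into hypothetical "stripes" that each record the min and max of a column, and it skips any stripe whose statistics prove that no row can match a `value > threshold` filter. Real ORC keeps similar statistics at the file, stripe, and row-group level, but the function names and structure here are illustrative only.

```python
from typing import Dict, List, Tuple


def build_stripes(values: List[int], stripe_size: int) -> List[Dict]:
    """Split values into fixed-size chunks and record min/max statistics for each."""
    stripes = []
    for start in range(0, len(values), stripe_size):
        chunk = values[start:start + stripe_size]
        stripes.append({"min": min(chunk), "max": max(chunk), "values": chunk})
    return stripes


def scan_greater_than(stripes: List[Dict], threshold: int) -> Tuple[List[int], int]:
    """Return the values > threshold and how many stripes actually had to be read."""
    matches: List[int] = []
    stripes_read = 0
    for stripe in stripes:
        # The statistics prove no value in this stripe can satisfy the filter,
        # so the reader skips it without touching its data.
        if stripe["max"] <= threshold:
            continue
        stripes_read += 1
        matches.extend(v for v in stripe["values"] if v > threshold)
    return matches, stripes_read


# Hypothetical sorted column of 100 values split into 10 stripes of 10.
stripes = build_stripes(list(range(100)), stripe_size=10)
matches, stripes_read = scan_greater_than(stripes, threshold=85)
print(f"{len(matches)} matches, {stripes_read} of {len(stripes)} stripes read")
# prints "14 matches, 2 of 10 stripes read"
```

Skipping pays off most when the data is sorted or clustered on the filtered column; on randomly ordered data, most stripes' min/max ranges overlap the filter and little can be skipped.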

Advantages

– Good compression rates, smaller file sizes

– Support for predicate pushdown and lightweight indexing

– Suitable for large data sets

Disadvantages

– Limited support for non-Hadoop environments

– Not as widely supported as other file formats like Parquet

Use cases: ORC is best suited for use cases that require storing large amounts of data with high compression rates and querying that data frequently.

RC (Record Columnar)

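RC (RCFile) is a hybrid row-columnar file format that is commonly used in Hadoop and Hive. It first splits a table horizontally into row groups and then stores each row group column by column, combining the record locality of row storage with some of the compression benefits of columnar storage. Its structure is simple, it is designed to support large data sets, and it has good compression capabilities.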
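RCFile's layout is a hybrid: it partitions rows into row groups and then stores each group column by column. The Python sketch below is a simplified model of that idea, not the real RCFile on-disk format. The hypothetical `to_row_groups` function does the horizontal-then-vertical split, and the hypothetical `read_column` shows that a query needing one column only has to touch that column's slice within each group.

```python
from typing import Any, List, Sequence, Tuple


def to_row_groups(rows: Sequence[Tuple[Any, ...]], group_size: int) -> List[List[List[Any]]]:
    """Partition rows horizontally into groups, then store each group column-wise."""
    groups = []
    for start in range(0, len(rows), group_size):
        chunk = rows[start:start + group_size]
        # zip(*chunk) transposes the group's rows into one list per column.
        groups.append([list(column) for column in zip(*chunk)])
    return groups


def read_column(groups: List[List[List[Any]]], column_index: int) -> List[Any]:
    """Read a single column by visiting only that column's slice in every group."""
    return [value for group in groups for value in group[column_index]]


# Hypothetical table with columns (id, name).
rows = [(1, "a"), (2, "b"), (3, "c")]
groups = to_row_groups(rows, group_size=2)
print(groups)                   # [[[1, 2], ['a', 'b']], [[3], ['c']]]
print(read_column(groups, 1))   # ['a', 'b', 'c']
```

Because a whole row group lives in one block, a reader can reassemble complete rows locally, while column-wise storage inside the group still lets it skip unneeded columns.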

Advantages

– Fast read and write operations

– Good compression capabilities

– Support for large data sets

Disadvantages

– Less efficient for analytical processing than fully columnar formats like ORC and Parquet, which add per-column statistics and indexes

– Limited support for nested data structures

Use cases: RC is best suited for use cases that require fast read and write operations and support for large data sets, such as data warehousing and data lake storage.

Parquet

Parquet is a columnar file format that is becoming increasingly popular in the big data world. It is designed to be highly efficient for analytical processing and supports nested data structures. Parquet also has excellent compression capabilities, which results in smaller file sizes.
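One reason columnar layouts compress well is that values from the same column sit next to each other, which makes encodings such as run-length encoding effective (Parquet combines dictionary encoding with a run-length/bit-packing hybrid). The toy Python sketch below is an illustration under simplifying assumptions, not Parquet's actual encoder: it run-length encodes a hypothetical table once row by row and once column by column and counts the resulting runs.

```python
from itertools import groupby
from typing import Any, Iterable, List, Tuple


def run_length_encode(values: Iterable[Any]) -> List[Tuple[Any, int]]:
    """Collapse consecutive equal values into (value, count) pairs."""
    return [(value, len(list(group))) for value, group in groupby(values)]


# Hypothetical table: a unique id plus two low-cardinality columns.
rows = [(i, "US" if i < 600 else "DE", "active") for i in range(1000)]

# Row-oriented: each whole row is one unit, and the unique id makes every row distinct.
row_runs = run_length_encode(rows)

# Column-oriented: each column is encoded on its own, so repeated values form long runs.
columns = list(zip(*rows))
column_runs = [run_length_encode(column) for column in columns]

print(len(row_runs))                          # 1000
print([len(runs) for runs in column_runs])    # [1000, 2, 1]
```

The id column gains nothing from run-length encoding here; a columnar writer can pick a different encoding per column (Parquet offers delta encoding for integers, for example), which is another benefit of storing columns separately.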

Advantages

– Highly efficient for analytical processing

– Supports nested data structures

– Good compression capabilities

Disadvantages

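– Slower writes and full-row reads than row-based formats, since each record is split across columns on write and reassembled on read

– Not well suited to writing records one at a time (for example, streaming appends), because writers buffer data into row groups before flushing it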

Use cases: Parquet is best suited for use cases that require efficient analytical processing and support for nested data structures, such as machine learning and data analytics.

Avro

Avro is a row-based file format that is designed to support schema evolution. The schema is stored alongside the data, so fields can be added to or removed from the schema (new fields need a default value) without breaking compatibility between readers and writers. Avro also supports a variety of data types, including primitive types, records, enums, arrays, maps, and unions.
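Avro's schema evolution works by resolving the writer's schema (stored in the file) against the reader's schema: fields are matched by name, fields the reader does not know are ignored, and fields missing from the written data are filled from the reader's `default`. The Python sketch below models only those record-field rules, using plain dicts shaped like Avro's JSON field lists. It is a simplified illustration, not the Avro library's API, and the `resolve_record` function is hypothetical; it skips type promotion, aliases, and nested schemas.

```python
from typing import Any, Dict, List


def resolve_record(
    record: Dict[str, Any],
    writer_fields: List[Dict[str, Any]],
    reader_fields: List[Dict[str, Any]],
) -> Dict[str, Any]:
    """Project a record written with writer_fields onto reader_fields (simplified Avro rules)."""
    writer_names = {field["name"] for field in writer_fields}
    resolved: Dict[str, Any] = {}
    for field in reader_fields:
        name = field["name"]
        if name in writer_names:
            # Field exists in both schemas: keep the written value.
            resolved[name] = record[name]
        elif "default" in field:
            # Field added in the reader's schema: fall back to its default.
            resolved[name] = field["default"]
        else:
            raise ValueError(f"field {name!r} is missing from the data and has no default")
    # Writer-only fields never reach the output, i.e. they are ignored.
    return resolved


# Hypothetical v1 (writer) and v2 (reader) schemas: v2 drops "email" and adds "country".
writer_v1 = [{"name": "id", "type": "long"}, {"name": "email", "type": "string"}]
reader_v2 = [
    {"name": "id", "type": "long"},
    {"name": "country", "type": "string", "default": "unknown"},
]

old_record = {"id": 1, "email": "a@example.com"}
print(resolve_record(old_record, writer_v1, reader_v2))  # {'id': 1, 'country': 'unknown'}
```

This is why a default is required when adding a field: without it, a reader on the new schema would have no value to supply for data written under the old one.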

Advantages

– Supports schema evolution

– Supports a variety of data types

– Easy to read and write

Disadvantages

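– Slower analytical queries than columnar formats, because a query must read entire rows even when it needs only a few columns

– Larger files than columnar formats, since values from the same column are not stored together for compression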

Use cases: Avro is best suited for use cases that require schema evolution and support for a variety of data types, such as data integration and log storage.

Conclusion

| Feature | ORC | RC | Parquet | Avro |
|---|---|---|---|---|
| Data Format | Columnar | Hybrid (row groups stored column-wise) | Columnar | Row-based |
| Compression | Built-in compression | External compression | Built-in compression | Built-in compression |
| Splittable | Yes | Yes | Yes | Yes |
| Schema Evolution | Limited | No | Yes | Yes |
| Data Types | Supports complex data types | Supports simple data types | Supports complex data types | Supports complex data types |
| File Size | Small | Large | Small | Large |
| Analytical Query Performance | High | Low | High | Low |

In summary, ORC, RC, Parquet, and Avro each have their own strengths and weaknesses, which makes each of them valuable for big data processing. ORC and Parquet provide strong query performance and efficient compression in distributed systems like Hadoop and Spark. Avro is more versatile and can be used across many programming languages and environments, while RC is largely tied to the Hive and Hadoop ecosystem.

Choosing a data format for big data processing depends on the specific use case. If the use case involves processing large volumes of data in a distributed environment, then ORC or Parquet would be the better choice. If the use case involves working with multiple programming languages or environments, then Avro would be a more versatile choice.

It’s also important to consider the trade-offs between performance and storage when choosing a data format. Columnar formats like ORC and Parquet usually offer better performance for analytical queries over large datasets, and they also tend to produce smaller files, because similar values stored together compress well. Row-based formats like Avro are typically faster for writing record by record and for reading whole records. Therefore, it’s essential to choose a data format that balances your workload’s read, write, and storage requirements.

In conclusion, understanding the differences between these data formats can help make an informed decision when selecting a data format for big data processing.
